Zhengtong Xu 徐政通

Email: xu1703 AT purdue.edu

I'm a third-year PhD candidate at Purdue University, advised by Professor Yu She. I was a recipient of the 2025 Magoon Graduate Student Research Excellence Award at Purdue University (awarded to only 25 PhD students across the entire Purdue College of Engineering). I received my Bachelor's degree in mechanical engineering from Huazhong University of Science and Technology.

G. Scholar / Twitter / Github / LinkedIn

News

Research

An asterisk (*) indicates equal contribution.

My research focuses on designing learning algorithms that enable physical robots to perform everyday manipulation tasks with human-level proficiency. To this end, I am currently exploring multimodal robot learning.

LeTac-MPC: Learning Model Predictive Control for Tactile-reactive Grasping

A generalizable end-to-end tactile-reactive grasping controller with differentiable MPC, combining learning and model-based approaches.

DiffOG: Differentiable Policy Trajectory Optimization with Generalizability

DiffOG introduces a transformer-based differentiable trajectory optimization framework for action refinement in imitation learning.

Canonical Policy: Learning Canonical 3D Representation for Equivariant Policy

Canonical Policy enables equivariant observation-to-action mappings by grouping both in-distribution and out-of-distribution point clouds to a canonical 3D representation.

ManiFeel: Benchmarking and Understanding Visuotactile Manipulation Policy Learning

ManiFeel is a reproducible and scalable simulation benchmark for studying supervised visuotactile policy learning.

UniT: Data Efficient Tactile Representation with Generalization to Unseen Objects

UniT learns a generalizable tactile representation using only a single simple object.

VILP: Imitation Learning with Latent Video Planning

VILP integrates a video generation model into policies, enabling the representation of multi-modal action distributions while reducing reliance on extensive high-quality robot action data.

Safe Human-Robot Collaboration with Risk-tunable Control Barrier Functions

We address safety in human-robot collaboration with uncertain human positions by formulating a chance-constrained problem using uncertain control barrier functions.

LeTO: Learning Constrained Visuomotor Policy with Differentiable Trajectory Optimization

LeTO is a "gray box" method that marries optimization-based safety and interpretability with the representational abilities of neural networks.

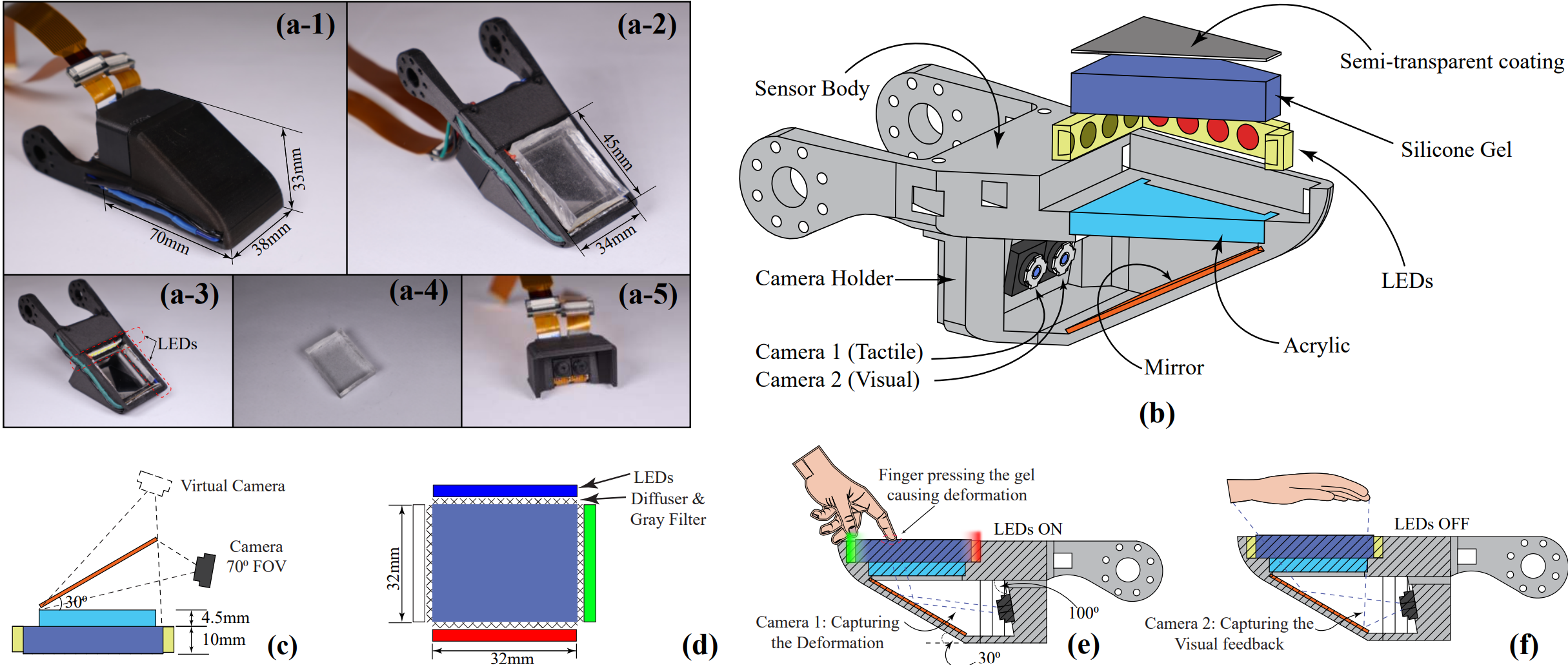

VisTac: Toward a Unified Multimodal Sensing Finger for Robotic Manipulation

VisTac seamlessly combines high-resolution tactile and visual perception in a single unified device.

Awards

Reviewer Service

Teaching

Website template from Jon Barron's website.

Meta Reality Labs